Back-end Services (Riders of Asgard)

In my last article, I spoke about game analytics and how I am using them for Riders of Asgard. In this article I am going to speak about the back-end services and how I went about implementing them.

The back-end system for Riders of Asgard is primarily responsible for the leader boards system and the hints and tips that are displayed on the loading screens. Furthermore it handles the sharing of high scores and Tweets the high scores to the official Riders of Asgard Twitter page (@ridersofasgard). It also periodically does a geo-lookup on the IP Address of a player and sets the country flag accordingly, as can be seen in the screen shot below.

Core Web Service

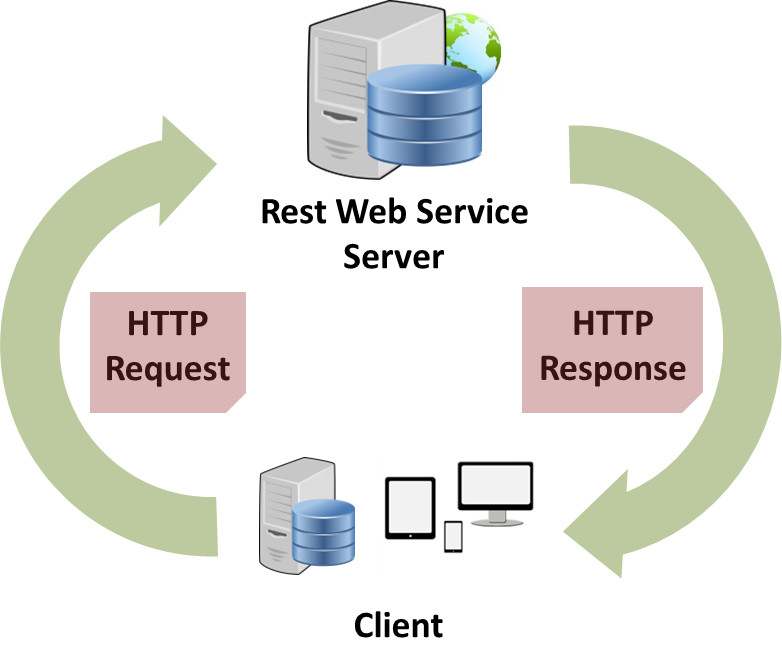

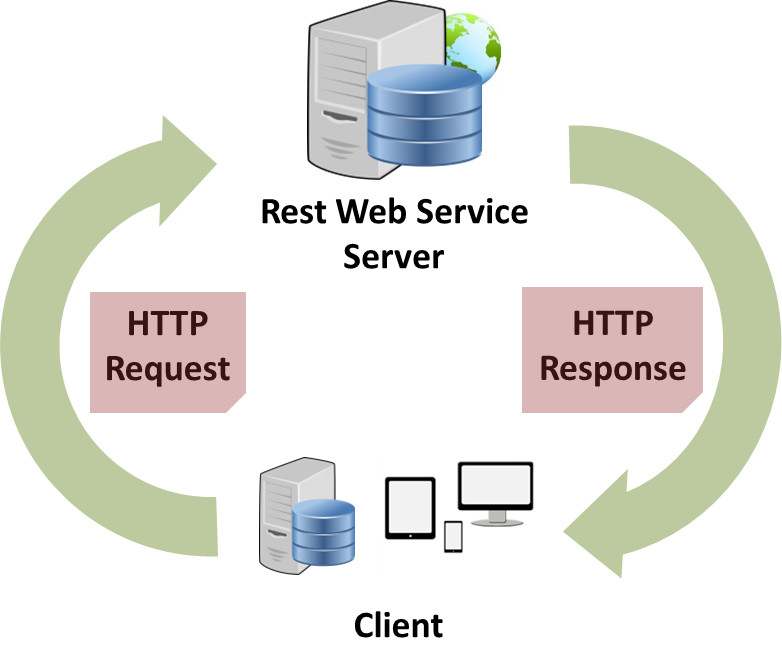

The core of the back-end service is a RESTful web-service built using Java and Spring Boot. Spring Boot builds on the Spring Framework, which is currently owned by Pivotal. Spring Boot makes it easy to create stand-alone, production-grade Spring-based applications that you can "just run". It takes an opinionated view of the Spring platform and third-party libraries so you can get started with minimum fuss. Most Spring Boot applications need very little Spring configuration.

Included with the base Spring Boot application, I am using Data JPA with the Repository Pattern, Thymeleaf, Web, and WebJars. All these are connected to a MySQL server. Spring Data’s mission is to provide a familiar and consistent, Spring-based programming model for data access while still retaining the special traits of the underlying data store.

Spring Data makes it easy to use data access technologies, relational and non-relational databases, map-reduce frameworks, and cloud-based data services. It is an umbrella project which contains many sub-projects that are specific to a given database. The projects are developed by working together with many of the companies and developers that are behind these technologies. Coupling it with the Repository Pattern means that the service is able to scale with the system as the project grows. If MySQL is no longer able to cope, no problem: add the PostgreSQL connector and restart the application.

The Repository Pattern allows the repository to mediate between the data source layer and the business layers of the application. It queries the data source for the data, maps the data from the data source to a business entity, and persists changes in the business entity to the data source. A repository separates the business logic from the interactions with the underlying data source or Web service.
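As a rough illustration of that separation, here is a minimal plain-Java sketch. It deliberately avoids Spring Data so that it runs on its own, and every name in it (`ScoreEntry`, `ScoreRepository`, `InMemoryScoreRepository`, `LeaderBoardService`) is hypothetical rather than taken from the Riders of Asgard code base. The in-memory map stands in for whichever database sits behind the repository.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical business entity: one leader board entry.
final class ScoreEntry {
    final String sessionId;
    final String playerName;
    final long score;

    ScoreEntry(String sessionId, String playerName, long score) {
        this.sessionId = sessionId;
        this.playerName = playerName;
        this.score = score;
    }
}

// The business layer depends only on this interface, never on the data store.
interface ScoreRepository {
    ScoreEntry save(ScoreEntry entry);
    Optional<ScoreEntry> findBySessionId(String sessionId);
    List<ScoreEntry> findTopScores(int limit);
}

// In-memory stand-in for a database-backed implementation (assumption for the sketch).
final class InMemoryScoreRepository implements ScoreRepository {
    private final Map<String, ScoreEntry> store = new ConcurrentHashMap<>();

    @Override
    public ScoreEntry save(ScoreEntry entry) {
        store.put(entry.sessionId, entry);
        return entry;
    }

    @Override
    public Optional<ScoreEntry> findBySessionId(String sessionId) {
        return Optional.ofNullable(store.get(sessionId));
    }

    @Override
    public List<ScoreEntry> findTopScores(int limit) {
        List<ScoreEntry> all = new ArrayList<>(store.values());
        // Highest score first.
        all.sort(Comparator.comparingLong((ScoreEntry e) -> e.score).reversed());
        int end = Math.max(0, Math.min(limit, all.size()));
        return new ArrayList<>(all.subList(0, end));
    }
}

// Business logic written against the interface only.
final class LeaderBoardService {
    private final ScoreRepository repository;

    LeaderBoardService(ScoreRepository repository) {
        this.repository = repository;
    }

    List<ScoreEntry> topThree() {
        return repository.findTopScores(3);
    }
}
```

Because `LeaderBoardService` only sees the `ScoreRepository` interface, swapping the in-memory class for a database-backed one would not require changes to the business logic.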

Spring Web forms the basis of the web-service and is tightly coupled with WebJars. WebJars are client-side web libraries (e.g. jQuery & Bootstrap) packaged into JAR (Java Archive) files which allow us to explicitly and easily manage the client-side dependencies in JVM-based web applications.

Thymeleaf is a Java XML/XHTML/HTML5 template engine that can work both in web (Servlet-based) and non-web environments. It is better suited for serving XHTML/HTML5 at the view layer of MVC-based web applications, but it can process any XML file even in offline environments. It provides full Spring Framework integration. In web applications Thymeleaf aims to be a complete substitute for JSP, and implements the concept of Natural Templates: template files that can be directly opened in browsers and that still display correctly as web pages.

Back-end Service

The foundation of the back-end service is an Ubuntu droplet running on DigitalOcean. DigitalOcean, Inc. is an American cloud infrastructure provider headquartered in New York City with data centers worldwide. DigitalOcean provides developers cloud services that help to deploy and scale applications that run simultaneously on multiple computers. As of December 2015, DigitalOcean was the second largest hosting company in the world in terms of web-facing computers.

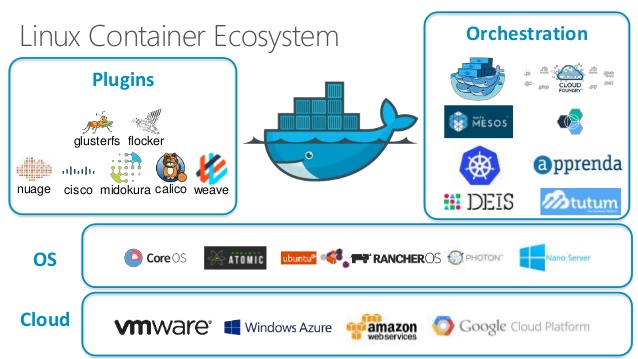

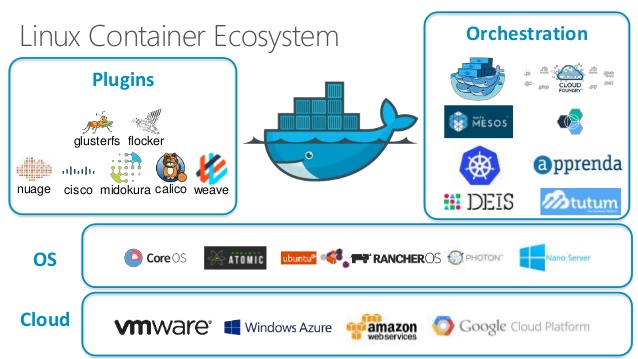

On top of our droplet we have deployed Docker containers for Java and MySQL that run the Spring Boot Web-service and MySQL database respectively. Docker is an open-source project that automates the deployment of applications inside software containers. Docker containers wrap up a piece of software in a complete file system that contains everything it needs to run: code, run time, system tools, system libraries – anything you can install on a server. This guarantees that it will always run the same, regardless of the environment it is running in.

Docker provides an additional layer of abstraction and automation of operating-system-level virtualization on Linux. Docker uses the resource isolation features of the Linux kernel such as cgroups and kernel namespaces, and a union-capable file system such as OverlayFS and others to allow independent "containers" to run within a single Linux instance, avoiding the overhead of starting and maintaining virtual machines.

This abstraction allows us to run multiple web-services and databases on the single droplet if required, and when necessary we can scale up the droplet seamlessly without affecting the individual systems running in their Docker containers. Furthermore, we can move the containers to separate servers if required, and if a container becomes compromised or unstable, it will not affect the other containers on the system.

Continuous Integration

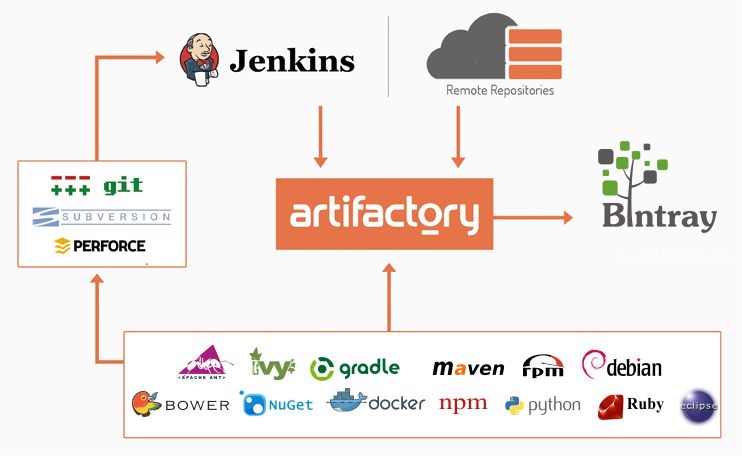

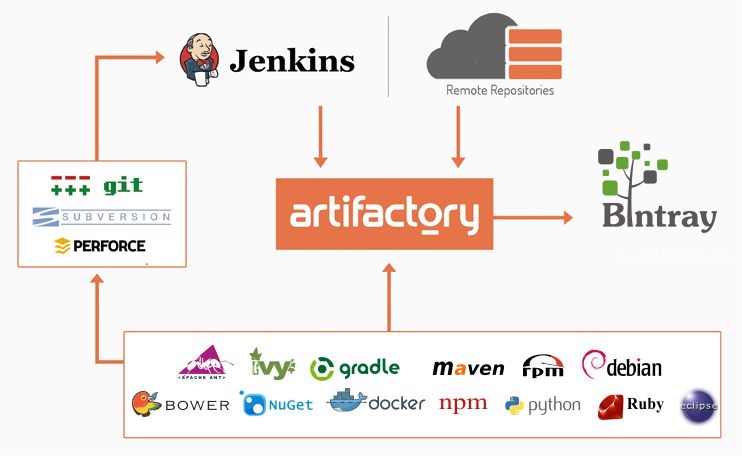

The entire system is designed with Continuous Integration in mind and is driven from the DevOps mindset. In software engineering, continuous integration (CI) is the practice of merging all developer working copies to a shared mainline several times a day. Grady Booch first named and proposed CI in his 1991 method, although he did not advocate integrating several times a day. Extreme programming (XP) adopted the concept of CI and did advocate integrating more than once per day - perhaps as many as tens of times per day. We use a combination of Jenkins and Artifactory for our system.

Jenkins helps to automate the non-human part of the software development process, covering now-common practices like continuous integration and also empowering teams to implement the technical side of Continuous Delivery. It is a server-based system running in a servlet container such as Apache Tomcat. It supports SCM tools including AccuRev, CVS, Subversion, Git, Mercurial, Perforce, Clearcase and RTC, and can execute Apache Ant and Apache Maven based projects as well as arbitrary shell scripts and Windows batch commands. The creator of Jenkins is Kohsuke Kawaguchi. Released under the MIT License, Jenkins is free software.

JFrog bills Artifactory as a universal Artifact Repository Manager that supports software packages created by any language or technology, including secure, clustered, High Availability Docker registries. It integrates with all major CI/CD and DevOps tools and provides an automated way of tracking artifacts from development to production.

All the Continuous Integration is driven from our GitLab Community Edition server. GitLab is a web-based Git repository manager with wiki and issue tracking features, using an open source license, developed by GitLab Inc. The software was written by Dmitriy Zaporozhets and Valery Sizov from Ukraine. The code is written in Ruby, and some parts were later rewritten in Go. As of Dec 2016, the company has 150 team members and more than 1400 open source contributors. It is used by organisations such as IBM, Sony, Jülich Research Center, NASA, Alibaba, Invincea, O’Reilly Media, Leibniz-Rechenzentrum (LRZ) and CERN.

Part of our DevOps process is the abundant use of Unit Tests and Integration Tests that are run on Jenkins using the Maven build tool. On a successful build, the relevant Docker Container is informed that there is an update and it will schedule the update when the system is not being utilised. Should the system end up being more than three versions behind, we will manually schedule the upgrade by bringing up a brand new Docker container with the new version and decommissioning the current one.

DevOps (a clipped compound of development and operations) is a term used to refer to a set of practices that emphasizes the collaboration and communication of both software developers and other information-technology (IT) professionals while automating the process of software delivery and infrastructure changes. It aims at establishing a culture and environment where building, testing, and releasing software can happen rapidly, frequently, and more reliably.

In computer programming, unit testing is a software testing method by which individual units of source code, sets of one or more computer program modules together with associated control data, usage procedures, and operating procedures, are tested to determine whether they are fit for use. Integration testing (sometimes called integration and testing, abbreviated I&T) is the phase in software testing in which individual software modules are combined and tested as a group. It occurs after unit testing and before validation testing. Integration testing takes as its input modules that have been unit tested, groups them in larger aggregates, applies tests defined in an integration test plan to those aggregates, and delivers as its output the integrated system ready for system testing.

Maven is a build automation tool used primarily for Java projects. The word maven means "accumulator of knowledge" in Yiddish. Maven addresses two aspects of building software: first, it describes how software is built, and second, it describes its dependencies. Contrary to preceding tools like Apache Ant, it uses conventions for the build procedure, and only exceptions need to be written down. An XML file describes the software project being built, its dependencies on other external modules and components, the build order, directories, and required plug-ins. It comes with pre-defined targets for performing certain well-defined tasks such as compilation of code and its packaging.

Conclusion

Some of you reading this may have noticed a trend towards Java and Open Source solutions. This was a conscious decision that we made. I have always advocated for Java and Open Source solutions in the enterprise, and my drive in Gobbo Games is to show that these technologies can be used in an enterprise without the need to pay exorbitant license fees.

Comments

How are you going to stop people submitting fake data?

I thought I remembered you using ASP.NET for previous things? If so, what would be the reason to not use it here? .NET Core and MVC are open source, and you can host it on non-Windows things.

Each session has a unique ID and this is generated when the round starts, only one score can be submitted for each unique session. We also have a built in encryption system (that I wrote inside of Unreal Engine) that uses the unique session ID as well as a salt value and the player name and this is decrypted on the back-end and validated against a checksum to ensure the data is valid and has not been changed.

Finally we also use an SSL connection to the web-service as well as a built-in anti-cheat system that prevents memory changers from modifying values sent to the web-service.
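To make the checksum and one-score-per-session ideas concrete, here is a minimal Java sketch of the server-side check. It is not the actual Riders of Asgard implementation: the real system uses a custom encryption scheme that is not shown here, so this sketch swaps in a standard HMAC-SHA256 keyed checksum as a stand-in. The class name `ScoreValidator`, the `|` field separator, and the method names are all assumptions made for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical validator: checks a keyed checksum and allows one score per session.
final class ScoreValidator {
    private final byte[] salt;
    // Session IDs that have already had a score accepted.
    private final Set<String> usedSessions = ConcurrentHashMap.newKeySet();

    ScoreValidator(byte[] salt) {
        this.salt = salt.clone();
    }

    // Computes an HMAC-SHA256 checksum over the submitted fields, keyed with the salt.
    String checksum(String sessionId, String playerName, long score) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(salt, "HmacSHA256"));
            // Assumed payload layout; a real format would need unambiguous field encoding.
            String payload = sessionId + "|" + playerName + "|" + score;
            byte[] digest = mac.doFinal(payload.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder();
            for (byte b : digest) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 unavailable", e);
        }
    }

    // Accepts a submission only if the checksum matches and the session is unused.
    boolean accept(String sessionId, String playerName, long score, String submittedChecksum) {
        String expected = checksum(sessionId, playerName, score);
        // Constant-time comparison of the two checksums.
        boolean valid = MessageDigest.isEqual(
                expected.getBytes(StandardCharsets.UTF_8),
                submittedChecksum.getBytes(StandardCharsets.UTF_8));
        // add() returns false when the session ID was already recorded.
        return valid && usedSessions.add(sessionId);
    }
}
```

In this sketch a submission with a bad checksum does not consume the session ID, so a later valid submission for the same session would still be accepted.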

I do use ASP.NET for my normal day job but have never used it for anything for Gobbo Games. I am a Java developer and have been developing in Java since 1997 (I only develop in C# because my current job requires it) and I have always used Open Source (Linux, Java, etc) in all my enterprise development.

I have found that the overhead from attempting to run ASP.NET MVC and stuff on non-windows hardware is too high (Mono is primarily responsible here) and if you do want to use it you are kind of limited to AWS or Azure and the costs for an Indie can be prohibitive, although not always.

I also prefer the Maven Build tool to something like NuGet, it is more flexible and works seamlessly with my Continuous Integration systems like Jenkins and Artifactory.

p.s. This is my opinion and is based on my experience with Enterprise Development using both Windows and Linux and their corresponding technologies.

Anyone who goes through the effort of doing this to get a high score on a leader board would be identified quite quickly and we are able to ban and prevent future entries on IP Address and Player Name.

The leader board is only ever saved at the end of the run due to the way the game modes work, it would not be practical to do it the way you recommended.

Let me just stress that this design choice was made because of the nature of the leader board, if this was an MMO or a MOBA that had more at stake then it would be a completely different situation. The saving would be handed off to the server which would then talk to the web-service. The server code would not be available and there would be other constraints and restrictions put in place to prevent reverse engineering.

Having said this, this is not what your article is about. Two things I would like to know are:

- How did you find writing unit tests in UE4? Does it handle it well?

- Have you stress tested your service? If a call to the service fails, how are you handling it so that it doesn't impact the player?

Most of the Unit Tests were for the Java back-end services and these are relatively simple to do. There are no Unit Tests for UE4 as most of the web-service code is done in Blueprints and this is almost impossible to unit test ... but by ensuring the web-service has comprehensive unit tests and the clear decoupling of the service from the game ... it is just as good.

Stress testing has been done using jMeter and the system is based on an architecture that I have implemented for another company. This architecture currently serves over 3000 requests per second and we are easily able to scale this should it be necessary.

Fail over is handled through HAProxy with a load balanced solution (not something I mentioned in this article), and the requests made from the game client to the web-service are made asynchronously. Once again, this was a conscious design decision because of the nature of the system and the fact that it is a low risk feature.

If I was doing something like a MOBA or MMO and storing player stats or inventory or something critical, I would then implement one of two solutions.

Fire and Forget Messaging System - a small payload message is sent to a message queue like ActiveMQ and a server processes it. The processing server would use consumer pools so that it would be able to cope with high volumes. This is similar to an architecture I have implemented for a remote system monitoring solution which currently monitors over 5000 devices country wide and receives heartbeat status messages every 60 seconds.

Synchronous System - this would be indicated by a progress indicator in the game, or something to show that some form of updating is taking place, almost like a save game system.

The use of these types of systems would depend on the scenario and the requirements. There could also be other solutions like encrypted save files or binary save files, or almost any other solution.
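For the fire-and-forget option, the shape of the idea can be sketched in plain Java. This is only an in-process approximation: a `LinkedBlockingQueue` stands in for a real broker such as ActiveMQ, and a fixed thread pool stands in for the consumer pool. The class `FireAndForgetQueue` and its methods are hypothetical names chosen for the sketch.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical in-process stand-in for a message queue with a consumer pool.
final class FireAndForgetQueue {
    private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();
    private final ExecutorService consumers;
    private final AtomicInteger processed = new AtomicInteger();
    private volatile boolean running = true;

    FireAndForgetQueue(int consumerCount) {
        consumers = Executors.newFixedThreadPool(consumerCount);
        for (int i = 0; i < consumerCount; i++) {
            consumers.submit(this::consumeLoop);
        }
    }

    // Producer side: enqueue and return immediately, without waiting for processing.
    void send(String message) {
        queue.offer(message);
    }

    // Each consumer keeps polling until shutdown is requested and the queue is drained.
    private void consumeLoop() {
        try {
            while (running || !queue.isEmpty()) {
                String message = queue.poll(50, TimeUnit.MILLISECONDS);
                if (message != null) {
                    // Real processing (for example persisting a heartbeat) would go here.
                    processed.incrementAndGet();
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Signals the consumers to stop once the queue is empty and waits for them to finish.
    boolean shutdown(long timeoutMillis) {
        running = false;
        consumers.shutdown();
        try {
            return consumers.awaitTermination(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    int processedCount() {
        return processed.get();
    }
}
```

The caller of `send` never blocks on the work itself, which is the property that keeps a slow or failing back-end from stalling the game client. With a real broker the queue would also survive a restart of the processing server, which this in-memory sketch does not.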

The main idea behind the article was to shed some light on the back-end services for Riders of Asgard and what technologies were used. The technologies were chosen because of the nature of the feature and were evaluated against the amount of work versus the risk involved. The entire system was developed using RAD (Rapid Application Development) and the base web-service took one evening to write.

All the work done on these services is based on proven architecture and principles that I apply to my work daily (yeah, I have a day job other than Gobbo Games) and these systems are all enterprise systems.