Facing Billboards Shader in Unity

So for a recent project I wanted a holographic 3D star map, with the stars as individual GameObjects: simple Quads with a star texture applied that always face the camera.


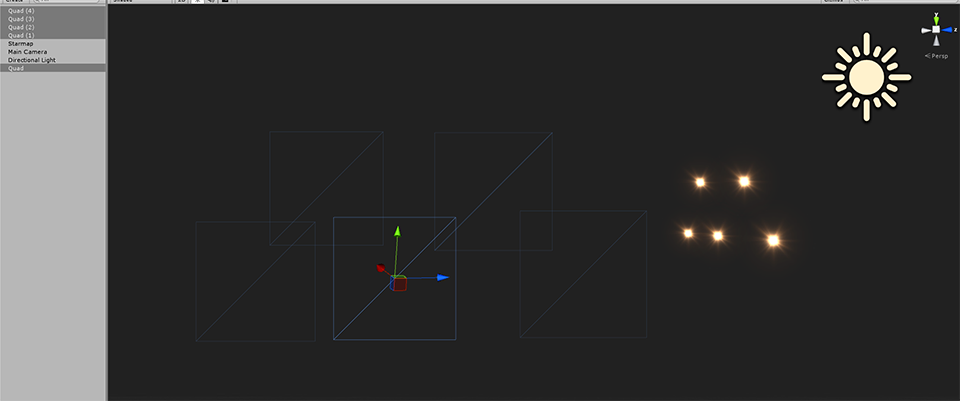

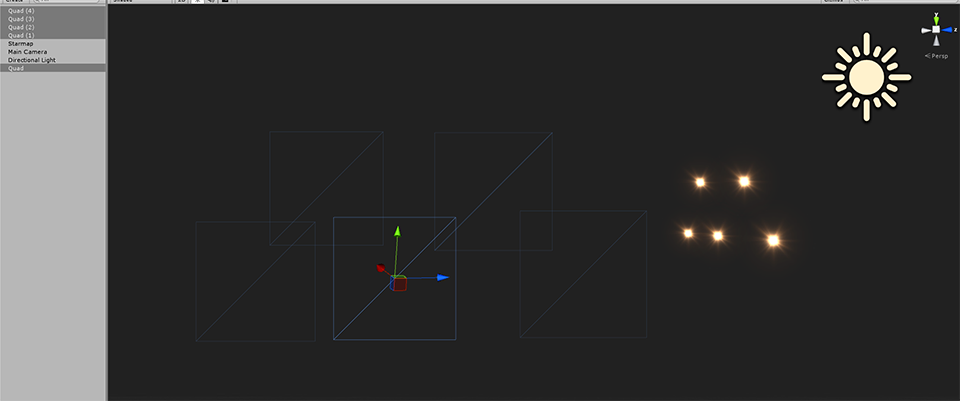


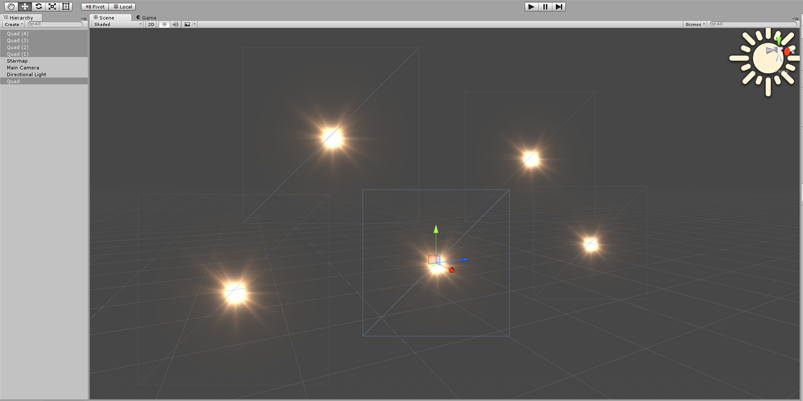

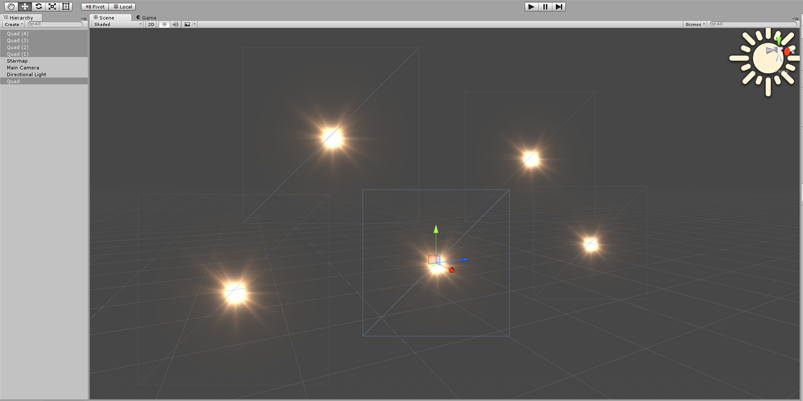

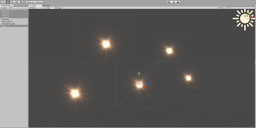


The first result that comes up on Google is to call transform.LookAt() inside each GameObject's Update() function, which is just plain horrible and unnecessary. I knew I wanted to do it in a vertex shader, because that's what they're for. After some further digging I found this code:

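```hlsl
// Vertex function from the billboard shader. vertexInput, vertexOutput and
// _Color are declared elsewhere in the shader.
vertexOutput vert(vertexInput input)
{
    vertexOutput output;

    // Start from the combined model-view matrix.
    float4x4 mv = UNITY_MATRIX_MV;

    // Overwrite the upper-left 3x3 (rotation/scale) block with the identity,
    // keeping only the translation stored in the fourth column.

    // First column.
    mv._m00 = 1.0f;
    mv._m10 = 0.0f;
    mv._m20 = 0.0f;

    // Second column.
    mv._m01 = 0.0f;
    mv._m11 = 1.0f;
    mv._m21 = 0.0f;

    // Third column.
    mv._m02 = 0.0f;
    mv._m12 = 0.0f;
    mv._m22 = 1.0f;

    // Object-space offsets now map straight onto view space, then get projected.
    output.vertex = mul(UNITY_MATRIX_P, mul(mv, input.vertex));

    output.tex = input.tex;
    output.color = input.color * _Color;

    return output;
}
```

Basically what it's doing is removing any rotation of the model around its center, so that it's always facing dead on.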
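To see why overwriting the 3x3 block makes the quad face the camera, here is a small CPU-side sketch of the same matrix maths in plain Python (no Unity involved). The helper names, the rotation-about-Y matrices and the scene values are all hypothetical, chosen only for illustration; the shader does the equivalent with HLSL's `mul()`. It assumes the quad lies in its local XY plane, as Unity's built-in Quad does.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform(m, v):
    """Apply a 4x4 matrix to a column vector, like HLSL mul(m, v)."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def rotation_y(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def translation(x, y, z):
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]


def strip_rotation(mv):
    """Copy mv and set its upper-left 3x3 block to identity, as the vertex shader does."""
    out = [row[:] for row in mv]
    for r in range(3):
        for c in range(3):
            out[r][c] = 1.0 if r == c else 0.0
    return out


# Hypothetical scene: a star quad at (2, 0, 5) rotated 30 degrees,
# and a view matrix that rotates the world by 45 degrees.
model = matmul(translation(2, 0, 5), rotation_y(30))
view = rotation_y(45)
mv = matmul(view, model)              # what UNITY_MATRIX_MV holds: view * model
billboard_mv = strip_rotation(mv)

pivot = [0.0, 0.0, 0.0, 1.0]          # the quad's centre in object space
corner = [0.5, 0.5, 0.0, 1.0]         # one corner of a unit quad

pivot_view = transform(billboard_mv, pivot)
corner_view = transform(billboard_mv, corner)

# The corner's offset from the pivot comes out unrotated in view space,
# so the quad sits in the view-space XY plane, i.e. it faces the camera.
offset = [corner_view[i] - pivot_view[i] for i in range(3)]
print(offset)
```

The pivot still lands exactly where the unmodified matrix would put it, because only the fourth (translation) column survives; only the orientation of the quad around it changes.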

But I got some extremely weird artefacts:

The wireframe of each Quad looks correct, but the actual rendered flare is way off to the side and it swings around wildly as the camera rotates. Worse, it seems to jump randomly depending on where the camera is and where it's facing.

After some experimentation and thought, I hit upon the answer: Unity batches geometry together into one big object to render it more efficiently, but then the center of the object is no longer the center of each flare; it's the combined center of the entire group. Some quick searching turned up a SubShader tag that disables batching, and adding it fixed this completely!

```
Tags { "DisableBatching"="True" }
```
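The batching problem can be reproduced with the same kind of CPU-side sketch. It assumes, hypothetically, that after batching the combined mesh's pivot sits at its origin and one star's quad has been baked into the vertex data 3 units along X; the helper names are illustrative, not Unity API. With the rotation stripped, that baked-in offset is no longer rotated by the camera, so the star's drawn position drifts away from its true position as the camera turns:

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform(m, v):
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def rotation_y(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def translation(x, y, z):
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]


def strip_rotation(mv):
    out = [row[:] for row in mv]
    for r in range(3):
        for c in range(3):
            out[r][c] = 1.0 if r == c else 0.0
    return out


# Hypothetical batched mesh: pivot at the origin, one star's centre baked in at (3, 0, 0).
STAR_IN_BATCH = [3.0, 0.0, 0.0, 1.0]


def true_and_drawn(camera_yaw_deg):
    """Return (true, drawn) view-space positions of the star's centre."""
    view = matmul(rotation_y(camera_yaw_deg), translation(0, 0, -10))
    mv = view  # the batched mesh's model matrix is the identity in this sketch
    true_pos = transform(mv, STAR_IN_BATCH)
    drawn_pos = transform(strip_rotation(mv), STAR_IN_BATCH)
    return true_pos, drawn_pos


def drift(camera_yaw_deg):
    """Distance between where the star is and where the billboard shader draws it."""
    true_pos, drawn_pos = true_and_drawn(camera_yaw_deg)
    return math.dist(true_pos[:3], drawn_pos[:3])


for yaw in (0, 45, 90):
    print(yaw, round(drift(yaw), 3))
```

With no camera rotation the two positions agree, and the error grows as the camera turns, which matches the flare swinging off to the side.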

The shader can probably be optimised by just multiplying the ModelView matrix by a vector, but I'm just happy it works for now!
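One reading of that optimisation (my assumption, not something spelled out here): since the modified matrix keeps only its translation column, multiplying by it equals taking the view-space pivot, `UNITY_MATRIX_MV` times (0, 0, 0, 1), and adding the vertex's object-space offset. A CPU-side check of that equivalence in Python, with hypothetical helper names and values:

```python
import math


def transform(m, v):
    """Apply a 4x4 matrix to a column vector, like HLSL mul(m, v)."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def strip_rotation(mv):
    out = [row[:] for row in mv]
    for r in range(3):
        for c in range(3):
            out[r][c] = 1.0 if r == c else 0.0
    return out


def billboard_matrix_version(mv, vertex):
    """What the posted shader does: overwrite the 3x3 block, then multiply."""
    return transform(strip_rotation(mv), vertex)


def billboard_vector_version(mv, vertex):
    """Cheaper form: transform only the pivot, then add the unrotated offset.

    Assumes vertex.w == 1 and an affine mv (bottom row 0, 0, 0, 1).
    """
    pivot_view = transform(mv, [0.0, 0.0, 0.0, 1.0])
    return [pivot_view[0] + vertex[0], pivot_view[1] + vertex[1],
            pivot_view[2] + vertex[2], pivot_view[3]]


# Hypothetical model-view matrix: a rotation about Y plus a translation.
c, s = math.cos(math.radians(40)), math.sin(math.radians(40))
mv = [[c, 0, s, 1.5], [0, 1, 0, -2.0], [-s, 0, c, -7.0], [0, 0, 0, 1]]
vertex = [0.5, -0.5, 0.0, 1.0]

print(billboard_matrix_version(mv, vertex))
print(billboard_vector_version(mv, vertex))
```

In a shader, the vector form replaces a full matrix copy plus nine writes with one matrix-vector multiply and an add.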

Note: Because we're turning off batching, each billboard gets its own draw call, which makes rendering a little less efficient. You may run into issues if you apply it to thousands of objects in your scene at once.

I've attached the .shader file if anyone needs it.


Comments

Again, I'm not sure, but this is based on results some colleagues have told me about in the past, so if you're doing this as an optimization you might want to run some tests to check what the difference actually is.

Something else you could try is to use a ParticleSystem and emit particles where you want them to be. You get the billboarding built into the ParticleSystem (whatever Unity does to get that working), and they still batch. You can set the particle lifetime to the largest value it allows (100000 seconds, I think, which is still around 27 hours), and if your game really needs to run for longer than that, you can clear and respawn the system every 24 hours or so.

Getting a billboard shader working with batching sounds like it'd be pretty useful. You wouldn't be able to work with the object centre anymore, but perhaps there's some other reference point you can use to remember their positions... not sure how, whether it's a 3D texture or a DX11 geometry shader; I haven't really played with either of those much.

This inspired me to try out the geometry shader approach. I managed to find a billboard geometry shader that someone else had written, and cobbled together a script to generate a point cloud, which the shader uses to generate the billboards.

It (sort of) appears to work: it generates one draw call for an arbitrary number of sprites. Assuming you put some fancy animation in the shader, you can probably get quite an efficient result.

I have attached a Unity package with the code and shader (only tested on Unity 4), and I think it requires DX11:

renderheads.com/temp/billboard_geo_shader.unitypackage

Ah yes, this method is pretty cool for large datasets: it generates the quads inside the shader, one quad per vertex, so you can take advantage of batching, and it should be slightly more efficient than sending thousands of vertices from Unity itself.
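Roughly what a point-to-quad geometry shader does per input vertex, sketched in Python on the CPU: take the point's view-space position and emit four corners offset along view-space X and Y, so every quad faces the camera. This is a hypothetical illustration (the function name, strip order and sizes are my own), not the code from the linked package.

```python
def expand_point_to_quad(centre_view, size):
    """Return four view-space corners of a camera-facing quad around one point.

    Corners are in a common triangle-strip order: bottom-left, top-left,
    bottom-right, top-right (two triangles: BL-TL-BR and TL-BR-TR).
    """
    half = size / 2.0
    x, y, z = centre_view
    return [
        (x - half, y - half, z),
        (x - half, y + half, z),
        (x + half, y - half, z),
        (x + half, y + half, z),
    ]


# Hypothetical point cloud, already transformed into view space
# (in a shader this would be mul(UNITY_MATRIX_MV, point)).
points_view = [(0.0, 0.0, -5.0), (1.0, 2.0, -8.0), (-3.0, 0.5, -12.0)]

# One input vertex becomes one quad (four output vertices); on the GPU
# these would all come out of a single draw call.
quads = [expand_point_to_quad(p, 0.5) for p in points_view]
print(len(quads), sum(len(q) for q in quads))
```

In the real shader each corner would then be multiplied by the projection matrix before being emitted.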

The downsides are that it's not as simple to set up: you need to create a custom mesh with the vertices placed where you want them, and they're not trivial to move/update. It's not a good choice for my starmap, but it would suit something like point-cloud data visualisation very well. Nice!

I tested the CPU billboards with each billboard having a component which calls transform.LookAt() in the Update() function - I'm not looking at cases where you could cheat and just rotate a parent transform to look at the camera.

First up the Shader Billboards:

80 FPS, with the render thread doing 5ms worth of work to process 10001 batches.

When the camera is looking at only one or two of the billboards, things get hugely more efficient and the framerate jumps up to 500 FPS.

Now the CPU Billboards:

The framerate almost halves to 48 FPS. The render thread is now 3ms faster because it's only having to work on 3 batches, but the CPU is now taking an extra 9ms to process all those LookAt() commands.

Worse, when the camera isn't looking at all of the billboards, the CPU is still processing all those LookAt() calls, so the framerate only increases to 120 FPS (as opposed to 500 FPS for the shader). Also, because the quads are turned to look at the camera's position, when they get extremely close they start getting clipped by the near Z plane.

It took me a while to understand what you meant, but I went back and put all the billboards' Transforms into one large array that a single component loops through in its Update() function. It improved dramatically, but it's still nowhere near the performance of the shaders:

Now we're getting 60 FPS; the CPU time is still 5ms longer than with the shader (down from 9ms before). And when looking away from the particles, the framerate tops out at 160 FPS.
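Converting the frame rates from these tests into frame times (frame time = 1000 ms / FPS) makes them easier to compare with the millisecond figures for the render thread and CPU. This is just arithmetic on the numbers reported above:

$$
\begin{array}{lcc}
 & \text{all billboards in view} & \text{few or none in view} \\
\text{shader} & 80\ \text{FPS} \to 12.5\ \text{ms} & 500\ \text{FPS} \to 2.0\ \text{ms} \\
\text{LookAt() per object} & 48\ \text{FPS} \to 20.8\ \text{ms} & 120\ \text{FPS} \to 8.3\ \text{ms} \\
\text{LookAt() single array} & 60\ \text{FPS} \to 16.7\ \text{ms} & 160\ \text{FPS} \to 6.25\ \text{ms}
\end{array}
$$

On these numbers, with everything in view, per-object LookAt() costs roughly 8.3 ms more per frame than the shader and the single-array loop roughly 4.2 ms more, which lines up reasonably with the 9ms and 5ms CPU figures above.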

I think the conclusion you can draw from this is that you needn't worry about the excessive draw calls when using the billboard shader if you're doing something small and sensible with it (< 10,000 billboards). If you have more than that, I'd use @shanemark's implementation above. I still would not use the CPU LookAt() unless I had no other choice.

I've personally only dabbled in DX11 shaders out of interest, and have generally avoided them because of my impression that a relatively small percentage of players actually have DX11 cards and that adoption's been quite slow (though perhaps that's poor foresight on my part).

[edit] What are your PC specs, by the way (cpu/gpu)? I've been told before (albeit about 2 years ago, when ~150 draw calls was the limit for most mobile hardware, also this may have been on the Unity forums where accurate information's not exactly common...) that ~2k draw calls was PC territory. I'm keen to know where 10k+ falls in terms of low, medium or high end.

All the tests above were done on an i7 4770K with 16GB RAM and a GeForce GTX 780 Ti, so it would be interesting to see how much worse it is on a laptop or mobile device. You would probably have to scale back quite a bit. My use case for these will be a starmap of around 50 to 60 billboards, so within mobile territory. In any case, I'm confident that the shader will always outperform a similar LookAt() setup.

A little bit of background first:

The way I understand it (and I'm no expert on the subject), there are three different transform matrices used to get the final position of the vertex on the screen. As we know, a single matrix can store position, rotation and scale all in one.

- Your model / vertex has a position, rotation and scale in the world (Model matrix)

- Your camera has its own position, rotation and scale in the world (View matrix)

- Your camera has a lens which determines the zoom and fov (Projection matrix)

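Now you just multiply all three together (projection × view × model) and multiply the result by your input vertex to get the output vertex. Unity provides some built-in constants that are helpful here, such as UNITY_MATRIX_MV (model and view combined) and UNITY_MATRIX_P (projection).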
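A CPU-side Python sketch of that chain, using column vectors as Unity's shaders do, so the rightmost matrix is applied first. The perspective matrix is the OpenGL-style one (an assumption; the exact projection matrix Unity hands the GPU depends on the graphics API), and all helper names and values are hypothetical:

```python
import math


def matmul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform(m, v):
    """Apply a 4x4 matrix to a column vector, like HLSL mul(m, v)."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def translation(x, y, z):
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]


def rotation_y(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective projection (camera looks down -Z)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
        [0, 0, -1, 0],
    ]


model = matmul(translation(1, 2, 0), rotation_y(30))  # object placed and rotated in the world
view = translation(0, 0, -10)                          # view matrix for a camera at z = +10 looking down -Z
proj = perspective(60, 16 / 9, 0.3, 1000)              # the camera "lens"

vertex = [0.5, 0.5, 0.0, 1.0]

# Model first, then view, then projection.
step_by_step = transform(proj, transform(view, transform(model, vertex)))

mv = matmul(view, model)         # plays the role of UNITY_MATRIX_MV
mvp = matmul(proj, mv)           # plays the role of UNITY_MATRIX_MVP
combined = transform(mvp, vertex)

# Multiplying in the wrong order gives a different (wrong) result.
wrong_order = transform(matmul(proj, matmul(model, view)), vertex)
```

Because matrix multiplication is associative, pre-combining into MV or MVP gives the same answer as applying each matrix in turn, but the order of the factors matters.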

So to answer your question, I'm taking UNITY_MATRIX_MV, which is the model matrix combined with the view matrix, and then I'm erasing the rotation information that's already been set by how the camera and model are positioned in the world, by setting the top-left 3x3 block to the identity matrix. This leaves the position information intact (unfortunately it also destroys the scale information, but I'm working on that).

So in effect it deletes the rotation information from the model and view matrices, leaving the billboard with a screen-space rotation of zero, i.e. just facing dead-on.

Then you just need to run it through the projection matrix to account for the camera lens' zoom level, and you get your output vertex.

Hope I got the idea across; let me know if something isn't clear.

Besides solving that, if I'm able to tweak the shader enough to make it handle transparency, then it would solve pretty much all the problems I've had so far implementing the "particle-based" background at the scale I've been aiming for.

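To add scale to the transformation matrix while still stripping out the rotation, you just need to get the length of each column of the 3x3 block (with Unity's mul(matrix, vector) convention that's the per-axis scale; the rows only give the same numbers for uniform scale) and put those on the diagonal instead of the identity matrix.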
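A small Python sketch of the scale-preserving block, assuming Unity's column-vector convention (vertices transformed with `mul(matrix, vector)`) and a view matrix with no scale of its own. Under those assumptions each column of the model-view 3x3 block is a rotated axis multiplied by that axis's scale, so its length recovers the scale. Helper names and values are hypothetical:

```python
import math


def matmul3(a, b):
    """Multiply two 3x3 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rotation_y(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]


def scale(sx, sy, sz):
    return [[sx, 0, 0], [0, sy, 0], [0, 0, sz]]


def column_length(m, c):
    return math.sqrt(sum(m[r][c] ** 2 for r in range(3)))


def row_length(m, r):
    return math.sqrt(sum(m[r][c] ** 2 for c in range(3)))


def billboard_block_with_scale(mv3):
    """Replace a model-view 3x3 block with a pure scale matrix (rotation removed)."""
    sx, sy, sz = (column_length(mv3, c) for c in range(3))
    return scale(sx, sy, sz)


# Hypothetical upper-left 3x3 of UNITY_MATRIX_MV:
# view rotation * model rotation * non-uniform model scale (2, 3, 1).
mv3 = matmul3(rotation_y(45), matmul3(rotation_y(30), scale(2.0, 3.0, 1.0)))

block = billboard_block_with_scale(mv3)
print([round(block[i][i], 6) for i in range(3)])
print([round(row_length(mv3, r), 6) for r in range(3)])  # rows don't recover (2, 3, 1) here
```

In the shader, the same idea would write those three lengths into `_m00`, `_m11` and `_m22` instead of 1.0.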

So this:

becomes this:

And if you have a DX11 (or equivalent) GPU, you can use GPU instancing on these too.